By now (as at the time of writing in December 2019), unless you have been living under a rock for the past couple of years, you would be no stranger to the buzz about 5G telecommunication technology. In fact, as alluded to in my 2018 year-ender post, 5G trials have been conducted in many places. To update on that, South Korea’s 5G services went live in April 2019, making it one of the first countries to roll out 5G, while selected cities in the UK and US have seen 5G services launched by telco companies. There is even talk that the development of 6G is underway, although 5G has yet to see mass-scale rollout.

That said, given that little is known about 6G at the moment, and that 5G is still very much relevant, a brief discussion of 5G technology is warranted, in my opinion.

The Subject

What is 5G? Common sense would suggest that 5G is an upgrade from 4G, which was itself an evolution of its predecessor, 3G, and so on. (After all, it is so named because it is the fifth generation of wireless network technology.)

In fact, most introductory materials on 5G at least briefly recap how mobile wireless telecommunication technology advanced from one “G” to the next. The first generation of such technology was developed in the late 1970s and 1980s, when analog radio waves carried unencrypted voice data; WIRED chronicled that anyone with off-the-shelf components could listen in on conversations.

The second “G”, developed in the 1990s, not only made voice calls more secure but also provided more efficient data transfer through its digital systems. The third generation increased the bandwidth available for data transfer, allowing simple video streaming and mobile web access; this ushered in the smartphone era as we know it. 4G came along in the 2010s, alongside the exponential rise of the app economy driven by the likes of Google, Apple and Facebook.

The development of 5G was a long time coming, though: work on the core specifications began in 2011 and would not be completed until some seven years later. But what does 5G entail?

The Pros and Problems

From the outset, 5G promised much greater connection speeds: around 10 gigabits per second, to be exact (about 1.25 gigabytes per second). Such (theoretical) speeds would be 600 times faster than average 4G speeds on today’s mobile phones. That being said, current 5G tests and roll-outs show that real-world 5G speeds vary from around 200 to 400 megabits per second. One noteworthy test was of Verizon’s 5G coverage in Chicago, where download speeds reached nearly 1.4 gigabits per second. The same may not be true of upload speeds, however, and the other major caveat is the limited signal range.
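To make the speed figures above more concrete, here is a small Python sketch of the arithmetic. It assumes decimal (SI) units, so 1 byte is 8 bits and 1 gigabit is 1,000 megabits. The helper names and the 1 GB file are hypothetical, chosen only for illustration, and the 4G figure is simply what the “600 times faster” claim implies, not a measured average.

```python
# Worked arithmetic for the quoted 5G speed figures (decimal SI units assumed).
BITS_PER_BYTE = 8


def gigabits_to_gigabytes(gigabits: float) -> float:
    """Convert a rate in gigabits per second to gigabytes per second."""
    return gigabits / BITS_PER_BYTE


def download_seconds(file_gigabytes: float, rate_mbps: float) -> float:
    """Seconds needed to transfer a file of the given size (GB) at a rate in megabits/s."""
    file_megabits = file_gigabytes * 1000 * BITS_PER_BYTE
    return file_megabits / rate_mbps


peak_5g_gbps = 10.0
print(gigabits_to_gigabytes(peak_5g_gbps))  # 1.25

# Average 4G speed implied by the "600 times faster" claim, in megabits/s.
implied_4g_mbps = peak_5g_gbps * 1000 / 600
print(round(implied_4g_mbps, 1))  # 16.7

# Hypothetical 1 GB download at each quoted rate (megabits/s).
rates = {
    "5G theoretical peak": 10_000,
    "Verizon Chicago test": 1_400,
    "5G real-world (upper)": 400,
    "5G real-world (lower)": 200,
    "implied 4G average": implied_4g_mbps,
}
for label, mbps in rates.items():
    print(f"{label}: {download_seconds(1.0, mbps):.1f} s")
```

Under these assumptions, the gap between the theoretical peak and the 200 to 400 Mbps seen in practice is a factor of 25 to 50, which is why the real-world numbers matter more than the headline figure.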

The headline 5G speeds rely largely on millimeter wave technology (the portion of the wireless spectrum between 30 GHz and 300 GHz), which can transmit data at higher frequencies and faster speeds, but whose extremely short wavelength makes it far less reliable at covering distances. With a dash of humour, this resulted in TechRadar having to perform a “5G shuffle” dance around the 5G network node when testing the high speeds in Chicago. More concretely, this illustrates a key difficulty in rolling out 5G: the need to deploy a massive number of network nodes. New network infrastructure may therefore be required, vastly different from the existing infrastructure that supports 4G and earlier network technologies.
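Why does a shorter wavelength hurt range? A rough sketch, assuming ideal free-space conditions, uses two textbook formulas: wavelength λ = c / f, and the Friis free-space path loss between isotropic antennas, 20·log10(4π·d·f / c) dB. The 2 GHz comparison frequency is an assumption standing in for a typical pre-5G cellular band, and the 100 m distance is arbitrary.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def wavelength_mm(freq_hz: float) -> float:
    """Wavelength in millimetres, from lambda = c / f."""
    return SPEED_OF_LIGHT / freq_hz * 1000


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss between isotropic antennas, in dB.

    Ignores walls, foliage, rain and other obstacles, which further limit
    millimeter wave signals in practice.
    """
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


# 2 GHz is an assumed stand-in for a typical 4G-era band.
bands = [
    ("2 GHz (assumed 4G-era band)", 2e9),
    ("30 GHz (mmWave lower edge)", 30e9),
    ("300 GHz (mmWave upper edge)", 300e9),
]
for label, freq in bands:
    print(
        f"{label}: wavelength {wavelength_mm(freq):.1f} mm, "
        f"loss over 100 m {free_space_path_loss_db(100, freq):.1f} dB"
    )
```

In this idealised model, every tenfold increase in frequency adds 20 dB of loss over the same distance. Real mmWave systems use directional antenna arrays to claw some of this back, so the output illustrates the trend behind the “5G shuffle” rather than actual coverage.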

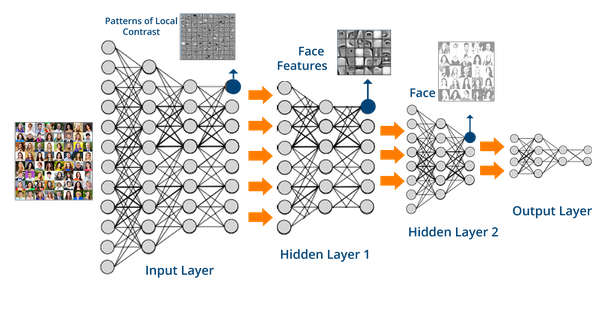

But just as other technological hurdles have been overcome, we can assume that a viable deployment of 5G networks will eventually be figured out and executed (not a very futuristic assumption at the current rate of progress). If so, 5G will also allow a greater number of devices to be served in near real time. This means accelerated advancement in the field of the Internet of Things, where more internet-connected devices and sensors can provide real-time data and execution, benefiting consumers and, even more so, various industries. It also means that autonomous vehicles, which rely heavily on real-time internet connectivity, would be more likely to see the light of day in terms of realisation and adoption.

Much of the conversation about 5G in the past couple of years, however, has centred on the “whos” and “hows” of infrastructure deployment. On this front, Chinese companies have reportedly outpaced their American counterparts in perfecting 5G network hardware capabilities. While China’s rate of technological advancement should no longer come as a surprise to anyone, these achievements came amid concerns that the same Chinese companies were involved in state-backed surveillance by introducing backdoors into their network equipment. The governments of the UK and US have been grappling with the resulting 5G infrastructure dilemma: use infrastructure from Chinese companies at the risk of creating vulnerabilities exploitable by a foreign power, or develop their own infrastructure, which takes time and may not catch up with the economic powerhouse soon enough.

The other issue surrounding the impending worldwide 5G roll-out is consumer device compatibility. As when 4G was first introduced, there are currently (in 2019) very few devices with 5G capabilities. And among those that do have them, CNET reported that these phones are limited to the millimeter wave spectrum band currently accessible for 5G, and will not support other spectrum bands if and when those are incorporated into 5G in the future. The problem of device compatibility may well resolve itself as 5G deployment progresses.

The Takeaways

The discussion about 5G is somewhat overdue, in the sense that mass roll-out of the infrastructure is already underway, albeit on the various timeframes each country has set. Malaysia, for instance, is set to deploy 5G as early as the second half of 2020, according to one report. It is therefore imperative for industries to explore how 5G technology may be leveraged to achieve greater efficiency and effectiveness.

And whilst 5G is only just about to take off, there are already discussions about 6G, with research on the sixth generation of network technology initiated by the Chinese government, a Finnish university and companies such as Samsung. Going by how long each generation’s technology has taken to develop, we will probably see 6G taking shape in the 2030s.

As for the question set out in the title, is 5G a deal-breaker? Yes, but not in 2019, and perhaps not yet in 2020. Mass infrastructure deployment must be complemented by industrial applications and use cases in order to fully reap the potential 5G possesses. But as we have observed in previous technology trends, this will inevitably fall into place; it is only a question of “when” and “how soon”.

With that, I shall end this post, which serves as the last post for the year (and the decade, if you are of the opinion that decades should begin with a 0 instead of 1).

And if I do continue writing this blog, see you next year (and in the next decade).